J Educ Eval Health Prof, Volume 20; 2023

Research article

Students’ performance of and perspective on an objective structured practical examination for the assessment of preclinical and practical skills in biomedical laboratory science students in Sweden: a 5-year longitudinal study

Catharina Hultgren1*, Annica Lindkvist2, Sophie Curbo1, Maura Heverin3

DOI: https://doi.org/10.3352/jeehp.2023.20.13

Published online: April 6, 2023

1Division of Clinical Microbiology, Department of Laboratory Medicine, ANA Futura, Karolinska Institutet, Stockholm, Sweden

2Division of Clinical Immunology, Department of Laboratory Medicine, ANA Futura, Karolinska Institutet, Stockholm, Sweden

3Division of Clinical Chemistry, Department of Laboratory Medicine, ANA Futura, Karolinska Institutet, Stockholm, Sweden

- *Corresponding e-mail: catharina.hultgren@ki.se

© 2023 Korea Health Personnel Licensing Examination Institute

This is an open-access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Abstract

Purpose

- This study aimed to evaluate students’ performance on and perspectives of an objective structured practical examination (OSPE) for the assessment of laboratory and preclinical skills in biomedical laboratory science (BLS). It also aimed to investigate the perception, acceptability, and usefulness of the OSPE from the students’ and examiners’ points of view.

Methods

- This longitudinal study implemented an OSPE in BLS. The student group consisted of 198 BLS students enrolled in semester 4 in 2015–2019 at Karolinska University Hospital Huddinge, Sweden. Fourteen teachers evaluated student performance using a checklist and global rating scales. A survey questionnaire was administered to the students to evaluate their perspective. For quality assurance, 4 independent observers monitored the examiners.

Results

- Almost 50% of the students passed the initial OSPE, and 73% passed the repeat OSPE. There was a statistically significant difference between the first and second attempts (P<0.01) but not between the first and third attempts (P=0.09). The student survey questionnaire was completed by 99 of the 198 students (50%), and only 63 students (32%) responded to the free-text questions. According to these responses, students perceived some stations as more difficult than others but considered the assessment valid. The observers found that the assessment protocols and examiners’ instructions ensured the objectivity of the examination.

Conclusion

- The introduction of an OSPE in the education of biomedical laboratory scientists provided a reliable and useful examination of practical skills.

Introduction

- Background/rationale

- Biomedical laboratory scientists (BLS) work in clinical laboratories (such as chemistry, microbiology, and transfusion medicine) as well as in, for example, research laboratories and pharmaceutical companies. They perform a wide range of laboratory assays on tissue samples, blood, and body fluids that are crucial for the health sector; today, approximately 60% to 70% of all diagnoses are based in part on analyses performed by a BLS [1]. BLS is a licensed health profession in many countries, and its core competencies include carrying out laboratory work, analysis, and assessment [2], with an emphasis on validation and quality assurance [3]. When practical skills are examined, the assessment is frequently unreliable and largely dependent on the examiners’ training [4]. An early innovation to improve practical evaluation was the objective structured clinical examination (OSCE), described in 1975 and in greater detail in 1979 by Harden and his group in Dundee, which was later extended to practical examinations as the objective structured practical examination (OSPE) [5]. The OSPE has been found to be objective, valid, and reliable. We have not found any publication in which an OSPE was used to assess the full range of BLS students’ competencies, although it has been used in other fields such as pharmacology and pathology [6,7]. For the assessment of BLS competencies, an OSPE can be designed to test various skills, for example, (1) general laboratory skills, such as the choice and handling of equipment and accessories; (2) interpretation of laboratory results and drawing of conclusions; (3) specific laboratory techniques; and (4) preclinical skills, such as sampling techniques, communication, and attitude. For this purpose, an agreed checklist, an instructor’s manual, and response questions covering these aspects are used to evaluate the students’ competencies. The observer evaluates each student according to the checklist and instructor’s manual provided.

- Objectives

- The purpose of this study was to evaluate second-year BLS students’ performance on and perspectives of the OSPE as an assessment tool for practical and preclinical skills in biomedical laboratory medicine. The specific research questions were to study (1) the perception, (2) the acceptability, and (3) the usefulness of the OSPE from the students’ and examiners’ points of view through a survey questionnaire. We hypothesized that students’ pass rates would increase after participation in a previous OSPE.

- Ethics statement

- Ethical approval was not required for this study, as per the Swedish Ethical Review Authority tool. This study did not include a clinical trial and did not collect any personal data. Participation in the survey was optional for the participants, and only anonymous data were included.

- Study design

- This was a 5-year retrospective longitudinal study involving biomedical laboratory science students. It is described according to the STROBE (Strengthening the Reporting of Observational Studies in Epidemiology) statement (https://www.strobe-statement.org/).

- Setting

- The study was conducted over a 5-year period (spring 2015 to 2019) at Karolinska University Hospital Huddinge, Sweden. The OSPE implementation is available from Supplements 1 and 2.

- Participants

- Biomedical laboratory science students enrolled in the Biomedical laboratory science program during semester 4 at Karolinska Institutet, Stockholm, Sweden. The inclusion criteria were all students (n=198) who were supposed to take part in the OSPE. Exclusion criteria were incomplete data (e.g., students who dropped out and did not take part in the examination). A total of 195 students participated and 3 did not and these were therefore excluded. If the students passed all intended learning outcomes (ILOs), they passed the OSPE and did not take part in a rerun. Details on the implementation of the OSPE are available from Supplement 1. During the second OSPE, 96 students participated and during the third OSPE, 26 students participated (Fig. 1). The student surveys were performed after the completion of the first OSPE, but before the students were notified of the result from the first exam. A total of 99 students (50%) agreed to complete the questionnaire.

- Variables

- Passing rate of OSPE is the primary outcome.

- Data sources/measurement

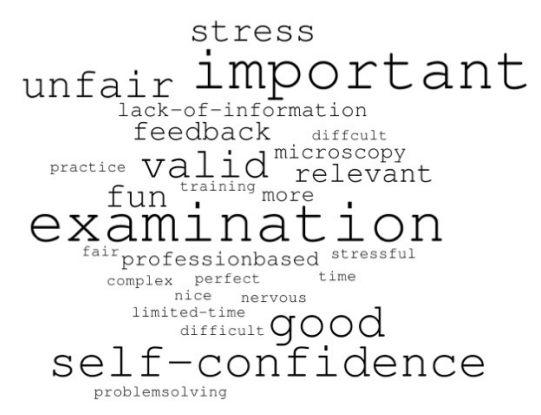

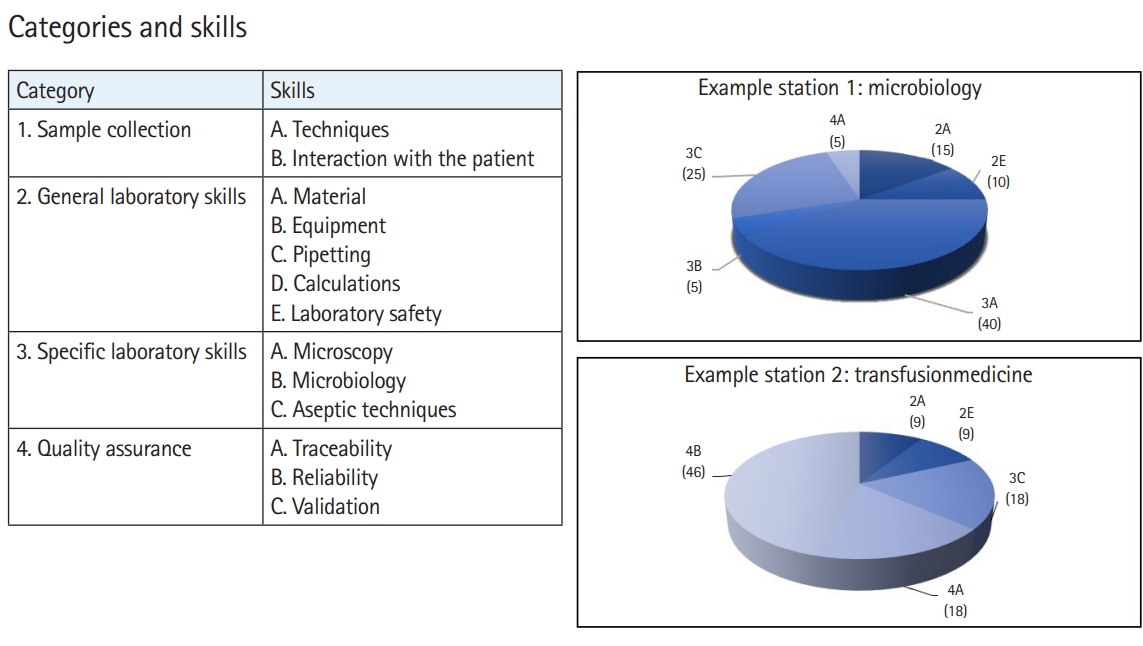

- Students’ performance data were generated after each OSPE (Dataset 1). Which ILOs that were assessed are depicted in Fig. 2. Furthermore, the perceptions of the examiners were evaluated both by 4 independent observers of test situations and by interviews with 5 of the participating examiners. The student perception was evaluated using anonymous voluntary survey forms with free text questions (Supplement 3). The free-text questions were further analyzed by grouping words according to resemblance and performing a word cloud (Fig. 3). Participants’ responses are available from Dataset 2.

- Bias

- No notifiable bias can be detected because most target students (195/198) participated in the study. Since participation in the survey questions was voluntary, there may be some bias in this aspect.

- Study size

- The sample size was not estimated since most target students were enrolled.

- Statistical methods

- Data were analyzed using Excel ver. 2016 (Microsoft Corp.) and Prism 9 (version 9.5.0, Graph Pad Software). Descriptive statistics summarized the distribution and frequency of pass rates. Comparisons between groups were made by one-way analysis of variance followed by post hoc Tukey’s test. Significance was set at a=0.05.

Methods
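The analyses were run in Prism. As a hedged illustration of what a one-way analysis of variance followed by Tukey’s test computes, the Python sketch below derives the F statistic and a pairwise Tukey-Kramer q statistic from raw group values. The group values are hypothetical and are not study data, and the helper names (`anova_parts`, `one_way_anova_f`, `tukey_q`) are illustrative only. The sketch stops short of P-values, which require the F and studentized range distributions; in Python, `scipy.stats.f_oneway` and `scipy.stats.tukey_hsd` provide those.

```python
import math
from statistics import fmean


def anova_parts(groups: list[list[float]]):
    """Group means, sums of squares, and degrees of freedom for a one-way ANOVA."""
    means = [fmean(g) for g in groups]
    grand_mean = fmean([x for g in groups for x in g])
    # Between-group SS: how far each group mean lies from the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group SS: how far each observation lies from its own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = sum(len(g) for g in groups) - len(groups)
    return means, ss_between, ss_within, df_between, df_within


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """F statistic with its degrees of freedom (P-value comes from the F distribution)."""
    _, ss_b, ss_w, df_b, df_w = anova_parts(groups)
    return (ss_b / df_b) / (ss_w / df_w), df_b, df_w


def tukey_q(groups: list[list[float]], i: int, j: int) -> float:
    """Tukey-Kramer studentized range statistic q for groups i and j.

    A pair is declared different when q exceeds the studentized range
    critical value for k groups and df_within degrees of freedom at alpha.
    """
    means, _, ss_w, _, df_w = anova_parts(groups)
    ms_within = ss_w / df_w
    se = math.sqrt(ms_within / 2 * (1 / len(groups[i]) + 1 / len(groups[j])))
    return abs(means[i] - means[j]) / se


# Hypothetical values for three groups (illustration only, not study data)
example = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
f_stat, df_b, df_w = one_way_anova_f(example)
print(f"F({df_b}, {df_w}) = {f_stat:.1f}")  # F(2, 6) = 27.0
print(f"q(group 1 vs 2) = {tukey_q(example, 0, 1):.3f}")  # q(group 1 vs 2) = 5.196
```

Tukey’s test is only interpreted after the ANOVA indicates that at least one group differs; with α=0.05, each pairwise q is compared with the tabulated critical value rather than with a fixed threshold.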

- Participants

- A total of 195 students (80,5% female) completed the OSPE examination and of these 99 also completed the student survey (response rate=50%). Table 1 displays the students’ characteristics. As examiners, 14 teachers participated and finally, 4 different teachers participated as quality observers during 2016–2019.

- Main results

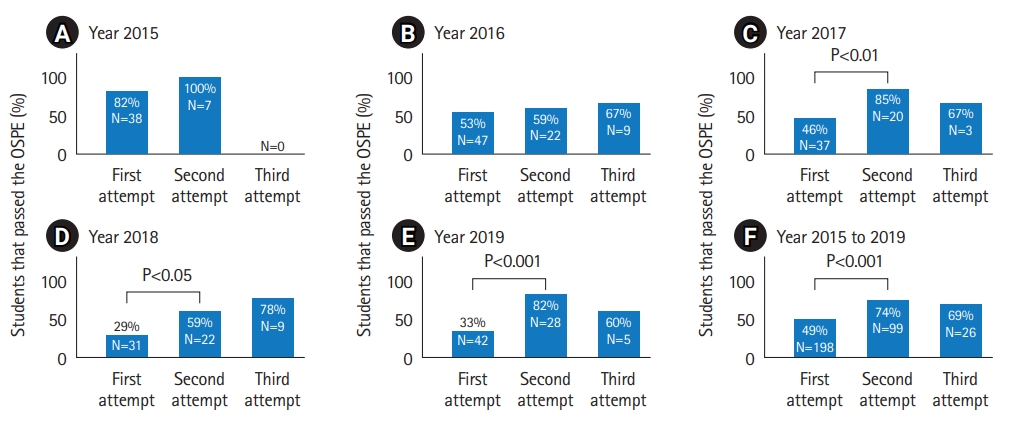

- During the first OSPE, the success rate in the OSPE varied between 29 and 81.8%, and in total 99 students failed 1 or more ILOs during the first OSPE (Fig. 4A–E). In total between 2015 and 2019, from the first OSPE run, there was a success rate of 49.2% (Fig. 4F). Among the students that participated in the second attempt, a much higher success rate was achieved (varying between 59.1% to 100%; mean 73.7%) that is 73 students passed the OSPE and only 26 failed (Fig. 4). Finally, a third OSPE run was performed (except for 2015), with the remaining 26 students and then 17 students managed to pass all ILOs and 9 failed to do so, that is a somewhat lower success rate of (varying between 60% to 77.8%; mean 65.4%) (Fig. 4). There was a statistically significant difference between the first and the second repeat OSPE (P<0.01) during 2017 to 2019, but not during the first 2 years. Nor was there any statistically significant difference between the first and the third attempt (Fig. 4). The OSPE assessed 4 different competencies (Supplement 1), sample collection, general and specific laboratory skills, and quality assurance. The highest Likert score (pass) varied between 44.1% to 95.9% for the individual stations; with a mean of 68.9% for all stations (Dataset 1).

- The voluntary student survey form was completed by 99 of the 198 students (50%) and only 63 students responded to the free-text questions (32%). In general, their opinions in the free-text questions were either defined as clearly positive or negative towards the OSPE, as is summarized in the word cloud in Fig. 3. These opinions ranged from positive reflections such as “fun and valid examination” and more negative reflections mentioning stress and the examination to be unfair.

- Regarding their different stations, most of the examiners agreed that the examination was useful and well-organized and that the tasks that the students were asked to perform at each station were fair, and the OSPE was a standardized examination for the assessment of preclinical and laboratory skills. Though some of the examiners’ mentioned that the OSPE might have unwanted effects on student behavior, such as just trying to “pass” the station rather than carefully carrying out the task they were asked to perform. The examiners also addressed the importance of agreed guidelines and thereby new and more specific guidelines could be included. The semi-structured interview questions are available from Supplement 4. Finally, some of the examiners stated that they would need more time for training on beforehand.

- The observers found a certain degree of subjectivity among the examiners, especially when the beforehand training was stated as insufficient by the examiners. They found that guidelines for the examiners were a key factor for objectivity of the assessment. With proper and agreed checklists they found the OSPE to a relevant assessment to discriminate between good and not so good performers and speculated that the variability that could be observed between examiners could be reduced with training.

Results

Students’ performance of the OSPE

Students’ perception of the OSPE

Examiners’ perception of the OSPE

Quality observers’ point of view
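The pooled success rates reported above follow directly from the counts of passing and failing students. As a quick arithmetic check, the Python sketch below recomputes them, assuming each denominator is the number of students who sat that attempt (195 for the first, 73 + 26 = 99 for the second, and 17 + 9 = 26 for the third); `pass_rate` is a hypothetical helper, not part of the study’s analysis.

```python
def pass_rate(passed: int, attempted: int) -> float:
    """Percentage of candidates who passed, rounded to one decimal place."""
    return round(100 * passed / attempted, 1)


# Counts taken from the text, pooled over 2015-2019
first_attempt = pass_rate(195 - 99, 195)  # 99 of 195 failed 1 or more ILOs
second_attempt = pass_rate(73, 73 + 26)   # 73 passed, 26 failed
third_attempt = pass_rate(17, 17 + 9)     # 17 passed, 9 failed

print(first_attempt, second_attempt, third_attempt)  # 49.2 73.7 65.4
```

The three values match the reported 49.2%, 73.7%, and 65.4%, which indicates that these figures are pooled proportions of all students attempting each round.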

Discussion

- Key results

- The aim was to evaluate second-year BLS students’ performance on and perspectives of the OSPE as an assessment tool for practical and preclinical skills. Almost 50% of the students passed the initial OSPE, and 73% passed the repeat OSPE. The students who responded to the survey perceived some stations as more difficult than others but considered the assessment relevant. The observers found that the assessment protocols and examiners’ instructions ensured the objectivity of the examination.

- Interpretation

Students’ performance of the OSPE

- The success rate was higher in 2015 and 2016 (Fig. 4A, B) than in 2017, 2018, and 2019 (Fig. 4C–E). The higher first-attempt pass rate in 2015 was statistically significant compared with all other first OSPE occasions (P<0.01, P<0.05, P<0.01, and P<0.001, respectively). We speculate that the main reason for this difference is the improved checklists and examiner training in later years. It has been shown that the assessment method influences and drives student learning [8,9]. Thus, assessing components such as performing laboratory tests and analyzing and interpreting laboratory data should drive students to learn these competencies. This was supported by the finding that the students performed better in the second OSPE (P<0.01). In addition, the pass rate was also higher in the third OSPE, although this difference was not statistically significant.

Students’ perception of the OSPE

- Most of the free-text answers reflected that the students found the examination relevant to their future profession and, in some cases, even an opportunity to practice what they had learned. The main negative opinions stated in the survey were lack of time, stress, and the examination being unfair. Some students described certain stations as easy and others as hard, a notion consistent with the variation in Likert ratings between stations; whether this affects the summative result of the OSPE remains to be clarified. However, because only 50% of the participants responded to the survey and only 32% gave free-text answers, these responses do not mirror the reflections of all students and may over-represent those who were either very content or very discontented with the OSPE. These views were, however, not influenced by the exam results, since the survey was administered before the students were notified of their results.

Examiners’ perception of the OSPE

- In general, the examiners perceived the OSPE as useful, but they also raised important points to address, such as the need for training beforehand and agreed checklists.

Quality observers’ point of view

- There is some evidence that examiner training and the use of different examiners for different stations reduce examiner variation in scoring and individual assessor bias [10]. To reduce examiner variation and assessor bias, different examiners and some rotation between stations were applied. This also highlighted the importance of agreed checklists, instructor’s manuals, and premade response questions, as well as the examiners’ expressed need for training beforehand.

- Comparison with previous studies

- This study agrees with findings on the objective structured laboratory examination (OSLE), which was previously used to assess practical competencies in a lab-based undergraduate biomedical science program in Malaysia, where it was perceived as useful by both students and faculty [11]. Although the OSLE is similar to the OSPE, the OSPE also includes preclinical skills such as blood sampling (including communication). The finding that students performed better in their second OSPE attempt is in line with previous studies reporting a strong positive correlation between students’ performance on in-training examinations and their final OSCE result [12]. It is also consistent with previous literature supporting the OSPE as a well-accepted assessment tool for practical competencies among both students and examiners [13].

- Limitations and generalizability

- The analysis of the student perspective has limitations: only 32% of the students provided reflections in free-text form, so these data do not represent the perspectives of all students, and most of those who did answer expressed rather strongly negative or positive attitudes. The study also used a global rating system, which limits its generalizability. Considering these results, the OSPE can be applied in BLS education to assess both preclinical and laboratory competencies, and the results may apply to BLS students at other institutions in Sweden.

- Suggestions

- Further research should investigate whether a different scaling system influences the outcome and more easily distinguishes between a borderline fail and a borderline pass, and how the cut score changes when the scale changes. In this setting, 4 ILOs were covered; it is also necessary to study how the cut score changes depending on the content and combination of the stations [14].

- Since stress was one of the major drawbacks in the students’ perception, measures to address it should be taken. One possible way to reduce stress and familiarize students with the OSPE would be to let them participate in a peer-led OSPE [15].

- Conclusion

- This study demonstrates that the OSPE provides an opportunity to test a student’s ability to integrate knowledge with the preclinical and practical skills that are essential for any student aspiring to become a successful BLS. It can be concluded that introducing an OSPE into BLS education provided a practical and useful examination of practical competencies.

Article information

Authors’ contributions

Conceptualization: CH. Data curation: CH, AL, SC, MH. Methodology/formal analysis/validation: CH, AL, MH. Project administration: CH. Funding acquisition: CH. Writing–original draft: CH. Writing–review & editing: CH, AL, SC, MH.

Conflict of interest

No potential conflict of interest relevant to this article was reported.

Funding

This work was supported by grants from Karolinska Institutet (funding no. 20180758). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Data availability

Data files are available from Harvard Dataverse: https://doi.org/10.7910/DVN/JDK6GM

Dataset 1. Raw data from OSPE by year.

Dataset 2. Students’ response to OSPE and survey questionnaire by year.

References

- 1. Olver P, Bohn MK, Adeli K. Central role of laboratory medicine in public health and patient care. Clin Chem Lab Med 2022;61:666-673. https://doi.org/10.1515/cclm-2022-1075

- 2. Stollenwerk MM, Gustafsson A, Edgren G, Gudmundsson P, Lindqvist M, Eriksson T. Core competencies for a biomedical laboratory scientist: a Delphi study. BMC Med Educ 2022;22:476. https://doi.org/10.1186/s12909-022-03509-1

- 3. Scanlan PM. A review of bachelor’s degree medical laboratory scientist education and entry level practice in the United States. EJIFCC 2013;24:5-13.

- 4. Yeates P, O’Neill P, Mann K, Eva KW. ‘You’re certainly relatively competent’: assessor bias due to recent experiences. Med Educ 2013;47:910-922. https://doi.org/10.1111/medu.12254

- 5. Khan KZ, Ramachandran S, Gaunt K, Pushkar P. The objective structured clinical examination (OSCE): AMEE guide no. 81. Part I: an historical and theoretical perspective. Med Teach 2013;35:e1437-e1446. https://doi.org/10.3109/0142159X.2013.818634

- 6. Malhotra SD, Shah KN, Patel VJ. Objective structured practical examination as a tool for the formative assessment of practical skills of undergraduate students in pharmacology. J Educ Health Promot 2013;2:53. https://doi.org/10.4103/2277-9531.119040

- 7. Prasad HLK, Prasad HVK, Sajitha K, Bhat S, Shetty KJ. Comparison of objective structured practical examination (OSPE) versus conventional pathology practical examination methods among the second-year medical students: a cross-sectional study. Med Sci Educ 2020;30:1131-1135. https://doi.org/10.1007/s40670-020-01025-9

- 8. Young M, St-Onge C, Xiao J, Vachon Lachiver E, Torabi N. Characterizing the literature on validity and assessment in medical education: a bibliometric study. Perspect Med Educ 2018;7:182-191. https://doi.org/10.1007/s40037-018-0433-x

- 9. Kickert R, Stegers-Jager KM, Meeuwisse M, Prinzie P, Arends LR. The role of the assessment policy in the relation between learning and performance. Med Educ 2018;52:324-335. https://doi.org/10.1111/medu.13487

- 10. Khan KZ, Gaunt K, Ramachandran S, Pushkar P. The objective structured clinical examination (OSCE): AMEE guide no. 81. Part II: organisation & administration. Med Teach 2013;35:e1447-e1463. https://doi.org/10.3109/0142159X.2013.818635

- 11. Chitra E, Ramamurthy S, Mohamed SM, Nadarajah VD. Study of the impact of objective structured laboratory examination to evaluate students’ practical competencies. J Biol Educ 2022;56:560-569. https://doi.org/10.1080/00219266.2020.1858931

- 12. Al Rushood M, Al-Eisa A. Factors predicting students’ performance in the final pediatrics OSCE. PLoS One 2020;15:e0236484. https://doi.org/10.1371/journal.pone.0236484

- 13. Mard SA, Ghafouri S. Objective structured practical examination in experimental physiology increased satisfaction of medical students. Adv Med Educ Pract 2020;11:651-659. https://doi.org/10.2147/AMEP.S264120

- 14. Homer M, Russell J. Conjunctive standards in OSCEs: the why and the how of number of stations passed criteria. Med Teach 2021;43:448-455. https://doi.org/10.1080/0142159X.2020.1856353

- 15. Bevan J, Russell B, Marshall B. A new approach to OSCE preparation: PrOSCEs. BMC Med Educ 2019;19:126. https://doi.org/10.1186/s12909-019-1571-5
