Emergency medicine and internal medicine trainees’ smartphone use in clinical settings in the United States


Abstract

Purpose:

Smartphone technology offers a multitude of applications (apps) that provide a wide range of functions for healthcare professionals. Medical trainees are early adopters of this technology, but how they use smartphones in clinical care remains unclear. Our objective was to further characterize smartphone use by medical trainees at two United States academic institutions, as well as their prior training in the clinical use of smartphones.

Methods:

In 2014, we surveyed 347 internal medicine and emergency medicine resident physicians at the University of Utah and Brigham and Women’s Hospital about their smartphone use and prior training experiences. Percentage scores (0%–100%) were calculated to assess how frequently they used general features (e.g., email and text messaging) and patient-specific apps. General and patient-specific scores were compared using the Wilcoxon signed-rank test, and scores were compared by resident level and program using the Mann-Whitney U test.

Results:

A total of 184 residents responded (response rate, 53.0%). The average score for the use of general features, 14.4/20 (72.2%), was significantly higher than the average score for the use of patient-specific features and apps, 14.6/44 (33.2%; P<0.001). The average scores for the use of general features were significantly higher for year 3–4 residents, 15.0/20 (75.1%), than for year 1–2 residents, 14.1/20 (70.5%; P=0.035), and for internal medicine residents, 14.9/20 (74.6%), than for emergency medicine residents, 12.9/20 (64.3%; P=0.001). The average score reflecting the use of patient-specific apps was significantly higher for year 3–4 residents, 16.1/44 (36.5%), than for year 1–2 residents, 13.7/44 (31.1%; P=0.044). Only 21.7% of respondents had received prior training in clinical smartphone use.

Conclusion:

Residents used smartphones for general features more frequently than for patient-specific features, but patient-specific use increased with training. Few residents have received prior training in the clinical use of smartphones.

INTRODUCTION

Physicians have used mobile devices in clinical care for the last two decades [1-4]. Personal digital assistants (PDAs) were among the first widely adopted hand-held mobile devices, used by 15% of physicians in 1999 and by 60% in 2005 [1]. In the late 2000s, PDAs were replaced by smartphones, and by 2011 the prevalence of clinical smartphone use by physicians in Accreditation Council for Graduate Medical Education (ACGME) programs in the United States was estimated at 88% [3]. As of 2013, smartphone technology provided access to more than 100,000 medical applications (apps) on the two main mobile device software platforms (iOS, Apple, Cupertino, CA, USA; Android, Google, Mountain View, CA, USA), 15% of which were directed at healthcare professionals [5]. These apps range in functionality from access to medical reference materials to electronic medical records and population health surveillance tools [1,3,5,6]. As of 2013, the United States Food and Drug Administration regulated apps that are used as an accessory to a medical device or that transform a mobile device into a regulated medical device (e.g., a phone used to record echocardiograms) [7]. However, the great majority of medical apps used by medical professionals remain unregulated. Several studies have further characterized the clinical applications of smartphones, including information gathering, communication between providers, and the tracking of interns’ usage [8-10]. One study highlighted the perils of smartphone use during inpatient rounds, including distraction [11]. More general descriptions of smartphone use exist, including surveys of medical students and junior physicians in the United Kingdom and Ireland, and a study of clinical app usage by ACGME trainees noted different rates of smartphone adoption among specialties [3,4,12].
These studies have documented a rising rate of clinical adoption, including increasing opportunities for general and personal use, but no recent analysis of the general usage of smartphones by medical trainees in the United States has been carried out.

Internal medicine trainees comprise the largest category of physicians in residency training in the United States [13]. While emergency medicine resident physicians provide care for medical conditions similar to those encountered in internal medicine, they do so in a different work environment, without the same continuity of care or participation in outpatient clinics [14,15]. The characteristics of these specialties allow for a unique comparison of smartphone use. In this study of two academic medical centers in the United States, we surveyed trainees in internal medicine and emergency medicine about their use of smartphones in clinical care with the objective of characterizing current clinical smartphone use, comparing patterns of usage between these two specialties, and identifying the frequency of prior training in smartphone utilization.

METHODS

Participants

In order to be eligible for survey inclusion, residents (postgraduate medical trainees) had to be in an internal medicine or emergency medicine program at the University of Utah or Brigham and Women’s Hospital in 2014.

Data collection

In March 2014, residency program directors sent their residents an email with a link to the smartphone survey, which was administered via SurveyMonkey (Palo Alto, CA, USA). This was a convenience sample, and the survey remained open until June 2014. After consenting to the study, residents indicated whether they used a smartphone during clinical practice. If they did not own or use a smartphone, the survey ended.

Smartphone survey

Following a review of the current literature on smartphone use by clinicians, an online survey was developed in December 2013 to capture demographic data, ownership of smartphones and clinical apps (either free or paid), use of clinical apps, and prior training experience. The survey questions were pilot-tested with 10 resident physicians and faculty members, and the final survey was refined by revising the features and apps included and clarifying the phrasing of some questions. Participants indicated monthly smartphone use on a four-point Likert scale where 1=once per month, 2=once per week, 3=once per day or every couple days, and 4=several times per day. They also indicated their usage of 16 features and apps on a five-point Likert scale that was identical to the monthly use scale except for an additional score of 0 indicating never.
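The coding described above can be sketched as a pair of lookup tables. This is a minimal, hypothetical Python sketch: the dictionary and function names and the exact label strings are assumptions (the survey's item wording is not reproduced here), and only the numeric codes, 0 for never through 4 for several times per day, come from the text. The maximum code of 4 per item is what makes the maximum sums for the 5 general items and the 11 patient-specific items equal to 20 and 44.

```python
# Hypothetical coding of the two response scales described above.
# Label strings are paraphrased from the text; the exact survey wording is assumed.
MONTHLY_USE_SCALE = {
    "once per month": 1,
    "once per week": 2,
    "once per day or every couple days": 3,
    "several times per day": 4,
}

# The feature/app usage scale is the same scale plus "never" coded as 0.
FEATURE_USE_SCALE = {"never": 0, **MONTHLY_USE_SCALE}


def code_response(label, scale):
    """Convert a response label to its numeric code, rejecting unknown labels."""
    key = label.strip().lower()
    if key not in scale:
        raise ValueError(f"unrecognized response: {label!r}")
    return scale[key]


# Highest possible code for one feature/app item.
MAX_ITEM_SCORE = max(FEATURE_USE_SCALE.values())

print(code_response("Several times per day", FEATURE_USE_SCALE))  # 4
print(code_response("never", FEATURE_USE_SCALE))  # 0
print(MAX_ITEM_SCORE * 5, MAX_ITEM_SCORE * 11)  # 20 44
```

Keeping "never" out of the monthly scale means a "never" answer to the monthly question is rejected rather than silently coded as 0.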

Statistical analyses

All data were analyzed with IBM SPSS ver. 22.0 (IBM Co., Armonk, NY, USA). Frequencies and percentages were calculated for all demographic survey items. Frequencies and percentages were also calculated for the number of medical apps, overall monthly smartphone use, the use of 16 particular features and apps, and prior training. The 16 features and apps were categorized as general features used for clinical practice (five items, including calendar or schedule, communication via text messages with other physicians, e-mail access, and web access) and patient-specific features and apps (the remaining 11 items). A general features percentage score was created by summing the usage ratings for the five general feature items and dividing by the total possible score of 20, with higher scores indicating higher use. A patient-specific percentage score was created by summing the usage ratings for the 11 types of features and apps specific to patient care and dividing by 44, with higher scores indicating higher use. To determine whether overall monthly use, the use of general features, and the use of patient-specific features and apps varied by subgroup, we compared monthly use ratings, general features percentage scores, and patient-specific features/apps percentage scores between age groups (under 30 years of age vs. over 30 years of age), genders, program types (emergency medicine vs. internal medicine), and stages of training (postgraduate year 1–2 vs. postgraduate year 3–4) with the Mann-Whitney U test. General feature scores and patient-specific features/app scores were compared using the Wilcoxon signed-rank test.
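A minimal sketch of the scoring and the two nonparametric comparisons described above, written in standard-library Python rather than SPSS. It assumes each respondent's ratings are already coded 0–4 and grouped into the 5 general and 11 patient-specific items; all function names and the example ratings are hypothetical. The p-values use the large-sample normal approximation with a tie correction and no continuity correction, and zero differences are dropped in the signed-rank test, so results may differ slightly from SPSS output, especially in small samples.

```python
import math
from collections import Counter

MAX_ITEM_SCORE = 4    # "several times per day" on the 0-4 usage scale
N_GENERAL_ITEMS = 5   # general features: maximum sum 5 * 4 = 20
N_PATIENT_ITEMS = 11  # patient-specific features and apps: maximum sum 11 * 4 = 44


def percentage_score(ratings, n_items):
    """Sum of 0-4 item ratings as a percentage of the maximum possible sum."""
    if len(ratings) != n_items:
        raise ValueError(f"expected {n_items} ratings, got {len(ratings)}")
    return 100.0 * sum(ratings) / (MAX_ITEM_SCORE * n_items)


def midranks(values):
    """Rank values from 1 to n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        i = j + 1
    return ranks


def tie_term(values):
    """Sum of t**3 - t over groups of tied values, where t is the group size."""
    return sum(t ** 3 - t for t in Counter(values).values())


def two_sided_p(z):
    """Two-sided p-value for a standard normal deviate z."""
    return math.erfc(abs(z) / math.sqrt(2))


def mann_whitney_u(x, y):
    """Independent groups: U for x and a tie-corrected normal-approximation p-value."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both groups need at least one value")
    pooled = list(x) + list(y)
    n = n1 + n2
    u1 = sum(midranks(pooled)[:n1]) - n1 * (n1 + 1) / 2
    var = n1 * n2 / 12 * ((n + 1) - tie_term(pooled) / (n * (n - 1)))
    if var == 0:
        return u1, 1.0  # every value tied: no evidence of a difference
    return u1, two_sided_p((u1 - n1 * n2 / 2) / math.sqrt(var))


def wilcoxon_signed_rank(x, y):
    """Paired samples: W+ and a tie-corrected normal-approximation p-value.

    Pairs with a zero difference are dropped before ranking."""
    if len(x) != len(y):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    abs_diffs = [abs(d) for d in diffs]
    w_plus = sum(r for r, d in zip(midranks(abs_diffs), diffs) if d > 0)
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term(abs_diffs) / 48
    return w_plus, two_sided_p((w_plus - n * (n + 1) / 4) / math.sqrt(var))


# Made-up ratings for six hypothetical residents (NOT study data).
general = [[3, 4, 2, 3, 3], [4, 4, 3, 2, 1], [2, 3, 3, 3, 2],
           [4, 4, 3, 4, 3], [4, 3, 4, 4, 4], [3, 4, 4, 3, 2]]
patient = [[1, 0, 2, 1, 1, 0, 2, 1, 0, 1, 1] for _ in range(6)]
gen_pct = [percentage_score(r, N_GENERAL_ITEMS) for r in general]
pat_pct = [percentage_score(r, N_PATIENT_ITEMS) for r in patient]
print(mann_whitney_u(gen_pct[:3], gen_pct[3:]))  # e.g., PGY 1-2 vs PGY 3-4
print(wilcoxon_signed_rank(gen_pct, pat_pct))    # general vs patient-specific, same residents
```

With these made-up ratings, every hypothetical year 3–4 general score exceeds every year 1–2 score, so U for the first group is 0; the numbers illustrate the mechanics only.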

Ethical approval

This study was exempted by the institutional review board of the University of Utah School of Medicine and the Partners Human Research Committee associated with Brigham and Women’s Hospital.

RESULTS

Participant demographics

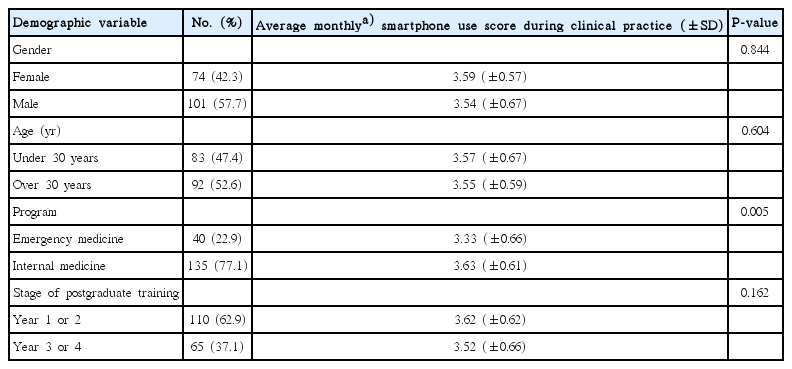

A total of 184 residents responded to the survey, of whom 141 were internal medicine residents and 43 were emergency medicine residents (response rates, 53.6% and 51.2%, respectively). A large majority of the responders (95.7%, n=176), including 136 internal medicine residents and 40 emergency medicine residents, indicated that they use a smartphone during clinical practice. Table 1 provides demographic information about the 175 smartphone users (one participant was omitted due to not completing the survey). Of the respondents who reported use during clinical practice, the majority of residents owned 1–10 medical apps (n=130, 74.3%), followed by 11–20 medical apps (n=28, 16.0%). Only 6.3% (n=11) owned 21–30 medical apps and 2.9% (n=5) owned more than 30 medical apps, and one resident did not own any medical apps.

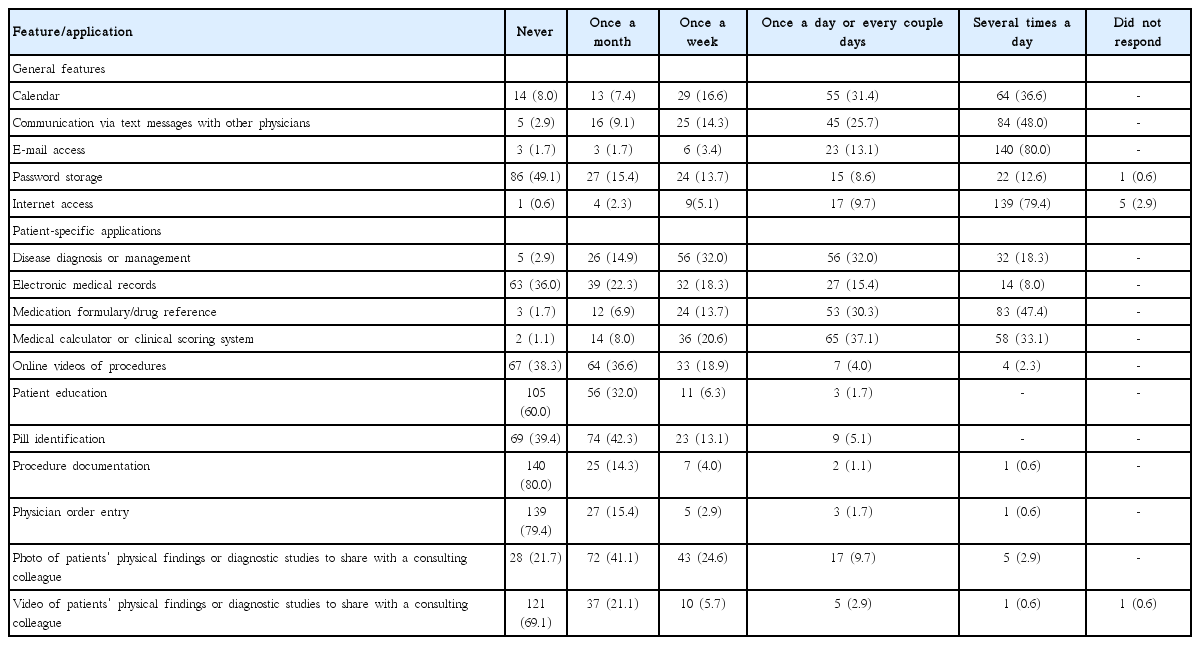

Use of smartphone features and apps

On a monthly basis, the majority of residents used their smartphone several times per day (n=109, 62.3%), followed by once per day or every couple of days (n=53, 30.3%). A smaller percentage used their smartphone once per week (n=10, 5.7%) or once per month (n=1, 0.6%). Two residents (1.1%) did not respond to the question about monthly use. Average monthly use scores by subgroup are presented in Table 1; overall, the average monthly use score was 3.56 (standard deviation, 0.63). Monthly use did not vary significantly by gender, age group, or training level, but internal medicine residents used their smartphone during clinical practice significantly more than emergency medicine residents (P=0.005). Table 2 shows the frequency of smartphone use during clinical practice for the five general features and the 11 patient-specific features and apps. When residents used their smartphone during clinical practice, they frequently used it for general features, such as email or web access. The most frequently used patient-specific features and apps were medical calculators or clinical scoring systems, disease diagnosis or management, and electronic medical records. Table 3 provides the scores for the use of general features and patient-specific features and apps by demographic characteristics. The average score for the use of general features, 14.4/20 (72.2%), was significantly higher than the average score for the use of patient-specific features and apps, 14.6/44 (33.2%; P<0.001). The scores reflecting the use of general features were significantly higher for year 3–4 residents, 15.0/20 (75.1%), than for year 1–2 residents, 14.1/20 (70.5%; P=0.035), and for internal medicine residents, 14.9/20 (74.6%), than for emergency medicine residents, 12.9/20 (64.3%; P=0.001). Patient-specific app scores were significantly higher for year 3–4 residents, 16.1/44 (36.5%), than for year 1–2 residents, 13.7/44 (31.1%; P=0.044). All other comparisons were not statistically significant.

Table 2. Frequency of usage of general and patient-specific features and applications during clinical practice among 175 residents

Prior training experiences with smartphone apps

Of the survey responders who used smartphones, only 21.7% (n=38) had received some kind of prior training with clinical apps in lectures, conferences, or seminars. Approximately half of the residents (n=85) were interested in receiving formal training, including 17 residents who had already received prior training.

DISCUSSION

Our study presents the findings of an online survey about residents’ smartphone use in two major academic centers. The descriptive results suggest that although the study participants have adopted smartphone technology, their clinical use of smartphones mostly involves general features (email, web access, and text communication), with less frequent use of patient-specific apps (medical calculators or clinical scoring systems, disease diagnosis or management, and electronic medical records). Other studies have highlighted the use of general features in clinical care [9,10], but we were surprised by how much more frequently residents used general features than patient-specific apps in clinical care. We hypothesize that this may be because residents use laptops on wheels and tablets at the patient’s bedside and/or desktops in clinical workspaces to access patient-specific resources such as clinical calculators and electronic medical records. Because our study focused only on smartphone use, we are unable to characterize the other options residents had for accessing these resources or how they used them. Moreover, given the constant demands placed on residents during clinical activities, they may switch among these various modalities. Because residents use these resources both to learn and to provide care, further investigation into how these resources are used could identify areas for focused teaching to improve the efficiency and quality of care.

We found notable differences between internal medicine residents and emergency medicine residents in the frequency and types of smartphone use. Internal medicine residents used smartphones more often during clinical care than emergency medicine residents. The survey responses showed a higher rate of use of general features by internal medicine residents, including texting and email, which have previously been highlighted as important modes of communication within the medical team and for obtaining medical advice [12]. Thus, internal medicine residents may rely on smartphones as an important means of communication. Another potential explanation for the variation in use between these two specialties is the difference in environments and workflow. In the emergency department, the volume and acuity of patients are variable and unpredictable, leading to frequent interruptions of clinical tasks [15]. In contrast, internal medicine residents participate in work rounds in the hospital, admit patients on a predetermined schedule, and see patients in clinic in a lower-acuity setting [16]. These circumstances might shape the frequency and type of smartphone usage among trainees. Because of its potential impact on quality improvement and patient safety, this area should be explored further to understand the individual and systemic factors affecting these choices, as well as the associated outcomes for patients and providers.

We highlight the paucity of formal training regarding smartphone use in clinical settings. The lack of education and training in the usage of this technology is particularly important in light of the frequent use of functions that can result in breaches of patient privacy, including photos of patients and texting other providers [17]. Similarly, the lack of education regarding smartphone use can have a direct impact on quality of care and, potentially, patient safety. A recent study of common medical calculator apps found that only 43% of apps tested were 100% accurate, and in the rest, errors were often clinically significant [18]. The appropriate usage of such apps should be addressed in medical education by teaching students and residents how to recognize high-quality apps and how to use them responsibly in practice. In addition, medical institutions can recommend and/or develop apps that facilitate communication between providers while maintaining patient privacy.

This is the first study to present findings regarding residents’ smartphone use in two major academic centers. Although the study participants have almost completely adopted smartphone technology, their use is mostly for general features rather than patient-specific features. While we recognize that smartphone use by the general public also increased during the period covered by this survey, the proportion of our respondents who used a smartphone in clinical practice (95.7%) exceeds the 88% estimated among ACGME trainees in 2011, suggesting a similar increase in smartphone use by medical trainees in United States academic centers [3,11]. Finally, prior training experiences were reported in our survey at a lower rate than in a recent survey of University of Toronto medical students, suggesting that learning opportunities may vary across institutions [17].

The main limitation of our study is that it is a descriptive analysis of a convenience sample of trainees at only two academic centers. In addition, recall bias could result in over-reporting or under-reporting of clinical use, especially of general features. Nonetheless, our results can inform future studies of this highly relevant topic. Another limitation is that some of the variation in smartphone use is likely attributable to institutional culture and access to resources. Our response rate is comparable to those of prior studies, but response bias could be present, resulting in the under-representation of non-smartphone owners. Finally, we surveyed only two specialties, and patterns of use may differ in other specialties.

In conclusion, internal medicine residents and emergency medicine residents in two major academic medical centers have almost completely adopted the use of smartphones and clinical apps in their clinical practice. The results of this study show greater use of smartphones for general purposes than for patient-specific functions. Few respondents had received training in the clinical use of this technology, and the limited regulation of medical apps raises concerns for medical education, patient safety, and the security of electronic medical information. These results have implications for the ongoing integration of technology into patient care by medical professionals and raise issues for medical education training programs and chief information officers.

Notes

No potential conflict of interest relevant to this article was reported.

SUPPLEMENTARY MATERIAL

Audio recording of the abstract.