J Educ Eval Health Prof, Volume 16; 2019

Research article

Medical students’ thought process while solving problems in 3 different types of clinical assessments in Korea: clinical performance examination, multimedia case-based assessment, and modified essay question

Sejin Kim1, Ikseon Choi1, Bo Young Yoon2, Min Jeong Kwon2, Seok-jin Choi3, Sang Hyun Kim2, Jong-Tae Lee4, Byoung Doo Rhee2*

DOI: https://doi.org/10.3352/jeehp.2019.16.10

Published online: May 9, 2019

1Research and Innovation in Learning Lab, College of Education, The University of Georgia, Athens, GA, USA

2Department of Internal Medicine, Inje University College of Medicine, Busan, Korea

3Department of Radiology, Inje University College of Medicine, Busan, Korea

4Department of Preventive Medicine, Inje University College of Medicine, Busan, Korea

- *Corresponding email: bdrhee@inje.ac.kr

© 2019, Korea Health Personnel Licensing Examination Institute

This is an open-access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract


Purpose

- This study aimed to explore students’ cognitive patterns while solving clinical problems in 3 different types of assessments—clinical performance examination (CPX), multimedia case-based assessment (CBA), and modified essay question (MEQ)—and thereby to understand how different types of assessments stimulate different patterns of thinking.


Methods

- A total of 6 test-performance cases from 2 fourth-year medical students were used in this cross-case study. Data were collected through one-on-one interviews using a stimulated recall protocol where students were shown videos of themselves taking each assessment and asked to elaborate on what they were thinking. The unit of analysis was the smallest phrases or sentences in the participants’ narratives that represented a meaningful cognitive occurrence. The narrative data were reorganized chronologically and then analyzed according to the hypothetico-deductive reasoning framework for clinical reasoning.


Results

- Both participants demonstrated similar proportional frequencies of clinical reasoning patterns on the same clinical assessments. The results also revealed that the 3 different assessment types may stimulate different patterns of clinical reasoning. For example, the CPX strongly promoted the participants’ reasoning related to inquiry strategy, while the MEQ strongly promoted hypothesis generation. Similarly, data analysis and synthesis by the participants were more strongly stimulated by the CBA than by the other assessment types.


Conclusion

- This study found that different assessment designs stimulated different patterns of thinking during problem-solving. This finding can contribute to the search for ways to improve current clinical assessments. Importantly, the research method used in this study can be utilized as an alternative way to examine the validity of clinical assessments.

Introduction

- How do medical students actually think while they are solving clinical problems in testing situations? Understanding the kinds of thinking students are actually engaged in while taking tests is essential for validating and improving clinical assessments. While most research in this area has focused on the results of clinical assessments [1,2], little attention has been given to exploring students’ thought process during tests. Thus, the purpose of this study was to explore medical students’ cognitive patterns while solving clinical diagnostic problems in different types of clinical assessments and to compare those cognitive patterns across the different assessments.

- The objective structured clinical examination and clinical performance examination (CPX) have been widely used in clinical assessments as standardized ways to assess components of clinical competence beyond general knowledge, such as clinical performance and reasoning [1-4]. The modified essay question (MEQ) is also frequently used as a paper-based method to assess clinical reasoning [5,6]. In this study, in order to explore and compare medical students’ cognitive patterns when completing different types of clinical assessments, we first chose 2 representative types of clinical assessments commonly used in medical education: the CPX as a clinical performance test and the MEQ as a paper-based clinical reasoning test. In addition, we included an emerging alternative assessment, a multimedia case-based assessment (CBA) that was designed and developed by Choi et al. [7]. Therefore, this study was conducted to answer the following research questions:

- Research question 1: What are medical students’ cognitive patterns of clinical diagnostic problem-solving in 3 different types of clinical assessments: the CPX, CBA, and MEQ?

- Research question 2: How do medical students think differently to solve clinical diagnostic problems in 3 different types of clinical assessments: the CPX, CBA, and MEQ?

- Ethics statement

- This study was approved by the Institutional Review Board of the University of Georgia (STUDY00001057). Written informed consent was obtained by the researchers.

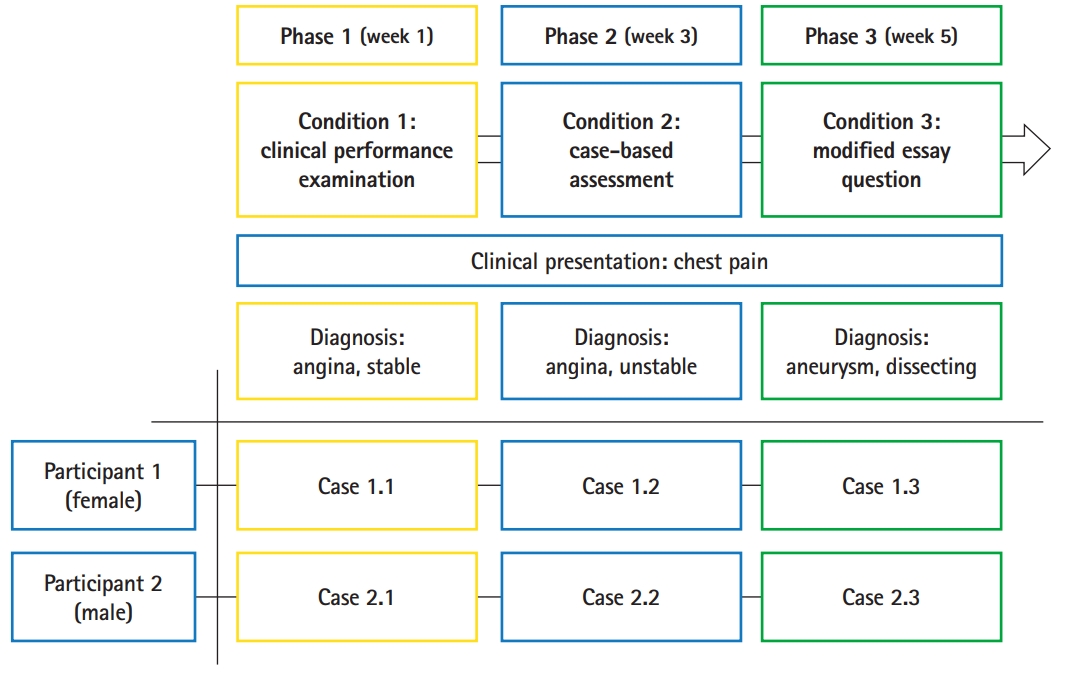

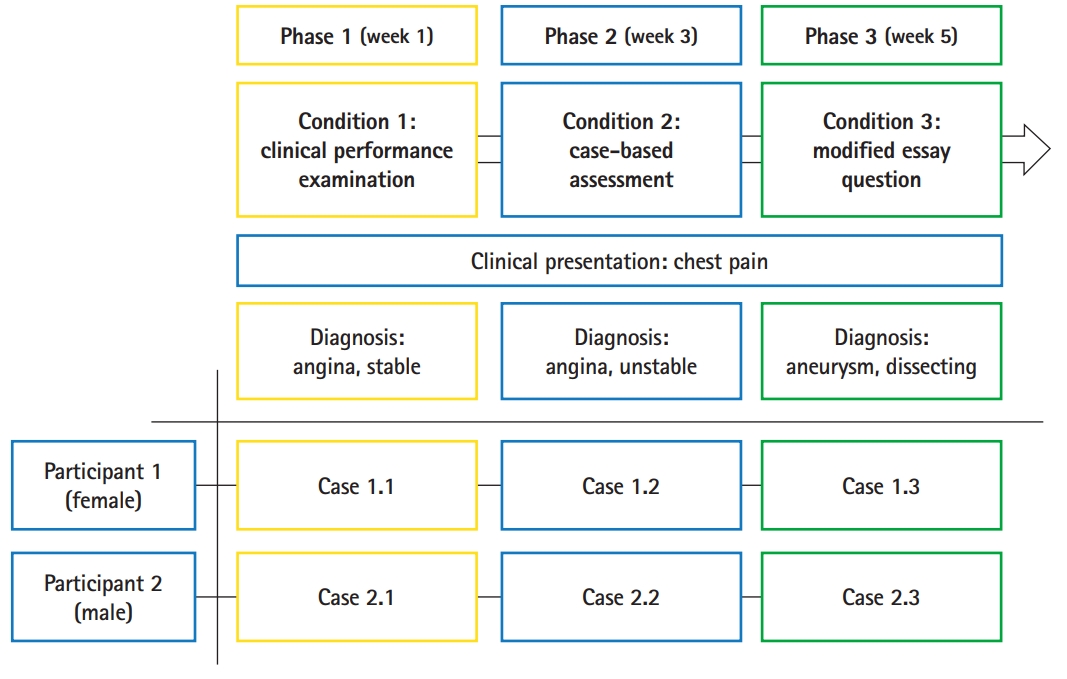

- Research design and intervention design

- A cross-case analysis was employed with 2 research participants and 3 different types of assessments in order to compare the differences in their cognitive patterns. The participants completed each type of assessment in the order of the condition numbers: condition 1 for the CPX, condition 2 for the CBA, and condition 3 for the MEQ (Fig. 1). The interval between each test was 2 weeks. Therefore, a total of 6 test-performance cases were collected and analyzed. The key features of the CPX, CBA, and MEQ used in this study are listed below:

- A 10-minute standardized patient-based clinical examination where a student interacts with a person who was recruited and trained to act as a real patient by simulating specific symptoms. The student’s clinical performance, including history-taking, physical examination, and diagnostic decisions, are assessed by an evaluation rubric.

- A video-based examination where a clinical case divided into 4 segments is provided and followed by a set of questions for each segment to assess a student’s clinical reasoning process and decision-making. The questions are related to cue identification, hypothesis generation, inquiry strategy, and data analysis for diagnosis. The test is delivered through an Internet browser on a computer, and the student cannot go back to the previous segment to change the answers. Each segment has a time limit (first segment, 6.5 minutes; second segment, 12 minutes; third segment, 12 minutes; and fourth segment, 17.5 minutes).

- A paper-based clinical examination where a clinical case is provided on paper along with a sequence of questions to assess a student’s clinical reasoning and decision-making. The test items include questions regarding cue identification, the diagnostic algorithm, hypothesis generation, and diagnosis. The MEQ in this study was composed of 2 segments, providing a sequence of scenarios on different pages with different stages of a clinical problem. Each segment had a 10-minute time limit for the student to solve all questions.

- A clinical presentation of chest pain was selected as the subject matter for all 3 assessments in the study. Chest pain is an essential clinical presentation regularly used in medical school curricula [8], which helped to control for participants’ lack of knowledge as a factor in problem-solving. This choice of subject matter also favored participants’ use of reasoning behaviors instead of hunting or guessing for the right answers. To prevent learning effects from taking 3 similar tests consecutively, the final diagnosis for each assessment was different—angina, stable; angina, unstable; and aneurysm, dissecting for the CPX, CBA, and MEQ, respectively—although chest pain was the chief complaint in each assessment.

- Participants

- Due to the high level of complexity and sensitivity in data collection and analysis, we aimed to recruit 2 fourth-year students from a medical school in Busan, Korea. In order to minimize unintended influence on participants’ thinking processes during the assessments from a lack of prior knowledge and CPX skills, purposeful sampling was employed. We first targeted fourth-year medical students; among a cohort of 109 fourth-year students, 17 who had experienced academic failure in previous semesters and 2 foreign students whose first language was not Korean were excluded. Next, we targeted the top 25% of the remining 90 students based on academic achievement, using their grade point average from the previous 3 years. The participants had completed the first semester of the fourth year by the time they participated in this study. This reduced the likelihood that participants simply guessed during problem-solving due to a lack of prior knowledge. Furthermore, in order to eliminate outliers in CPX performance, only those who had above-average previous CPX scores were targeted for recruitment. Finally, 1 female student and 1 male student were recruited to ensure gender balance in the study.

- Data collection

- This study utilized a video-based stimulated recall protocol for interviews that included 2 steps of data collection for each case. In step 1, the participants’ performances in each case was video-recorded. Then, each captured video was divided into 20 segments. For example, each segment of a 10-minute CPX video captured 30 seconds of the participant’s performance. Likewise, the participants’ CBA and MEQ performance videos were also divided into 20 segments, but the actual duration of each segment varied according to the total time of each performance. In step 2, each segment of the video was played back to the participants, and while watching their own performance on video, they were asked to recall cognitive occurrences by answering questions posed by the interviewer [9]. A list of retrospective interview questions used to elicit cognitive occurrences from the participants is provided in Table 1.

- Data analysis

- The interview data from the 6 cases (2 participants each with 3 test conditions) were transcribed and analyzed based on the hypothetico-deductive reasoning (HDR) model to identify the participants’ cognitive patterns of clinical diagnostic problem-solving. The HDR is one of the most suitable models for medical students to apply and practice their clinical reasoning to make a diagnosis [10,11]. The unit of analysis in this study was the smallest phrases or sentences from the participants’ narratives that represented a meaningful cognitive occurrence The transcribed narrative data were chunked by the unit of analysis as cognitive occurrences, guided by a naturalistic decision-making model (NDM) [9,12,13]. A sample data table with the unit of analysis (cognitive occurrence), NDM cognitive element category, and content of cognition is provided in Table 2.

- For further data analysis, each unit of the narrative data was chronologically reorganized in order to reconstruct the participants’ cognitive processes according to the order of the actual events that occurred during their performances. The reorganized data were then coded according to the HDR model, and any uncoded data were categorized as other cognitive occurrences. The category of other cognitive occurrences included cognitive behaviors that may not be authentic in real-world settings and may not necessarily occur when doctors encounter patients, but instead occur only in certain testing contexts. Because the other cognitive occurrences were not the main focus of this study, they were not subdivided or analyzed further. To obtain an overall picture of students’ cognitive patterns, however, the related statistics for other cognitive occurrences were still reported. A sample of a data analysis table with the reconstructed cognitive occurrences in chronological order, HDR coding indications, and other cognitive occurrence themes is presented in Table 3.

- Inter-rater reliability

- Inter-rater reliability was assessed in order to ensure the accuracy of the findings and the consistency of the analysis procedures. Two raters (the first author, an education expert, and the fourth author, a clinical expert) completed training sessions with the second author (an education expert). Then, the 2 raters coded the data independently according to the HDR model. Their independent coding results were compared, and any disagreements in the coding results between the 2 raters were identified and negotiated. The inter-rater reliability results for the initial and final coding are provided in Table 4.

Methods

CPX

CBA

MEQ
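As an illustration of how the comparison between 2 independent raters described under inter-rater reliability could be quantified, the sketch below computes simple percent agreement and Cohen’s kappa. This is only a sketch under stated assumptions: the text does not say which statistic Table 4 reports, the function names are hypothetical, and the short code labels and example sequences are invented for illustration rather than taken from the study data.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Share of units to which both raters assigned the same code."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of units")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Agreement corrected for the agreement expected by chance."""
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b)
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    # Chance agreement: product of each rater's marginal proportions per code.
    expected = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used a single identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes for 8 cognitive occurrences (NOT study data).
RATER_1 = ["inquiry", "inquiry", "hypothesis", "analysis",
           "other", "inquiry", "hypothesis", "analysis"]
RATER_2 = ["inquiry", "inquiry", "hypothesis", "analysis",
           "other", "analysis", "hypothesis", "analysis"]

AGREEMENT = percent_agreement(RATER_1, RATER_2)
KAPPA = cohens_kappa(RATER_1, RATER_2)
print(f"percent agreement: {AGREEMENT:.3f}, Cohen's kappa: {KAPPA:.3f}")
```

Running the same computation once on the initial codes and once on the negotiated final codes would give the kind of before-and-after comparison that the Methods describe, whichever agreement statistic is actually used.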

- Identification of clinical reasoning patterns and other cognitive occurrences in each condition (research question 1)

- In order to answer the first research question, the 2 participants’ cognitive patterns were compared. The proportional data (%) of each type of cognitive occurrences in each case, instead of the actual frequencies, were used in this study because each participant’s cognitive processes were based on their narrative data, the lengths of which were not equal. As shown in Table 5, 79.7% and 69.4% of the cognitive occurrences in participant 1 (female) and 2 (male), respectively, were identified as clinical reasoning during the CPX condition, while 20.3% and 30.6% of the cognitive occurrences, respectively, were found to be other cognitive occurrences. Likewise, 61.4% and 86.6% of the cognitive occurrences in participant 1 and 2, respectively, were clinical reasoning during the CBA condition, and 38.6% and 13.4% of the cognitive occurrences, respectively, were other cognitive occurrences. During the MEQ condition, 63.7% and 67.0% of the cognitive occurrences in participant 1 and 2, respectively, were identified as clinical reasoning, while 36.3% and 33.0% of the cognitive occurrences, respectively, were found to be other cognitive occurrences. As indicated earlier, the other cognitive occurrences consisted of various kinds of inauthentic thinking. The further analysis focused primarily on the cognitive occurrences classified as clinical reasoning.

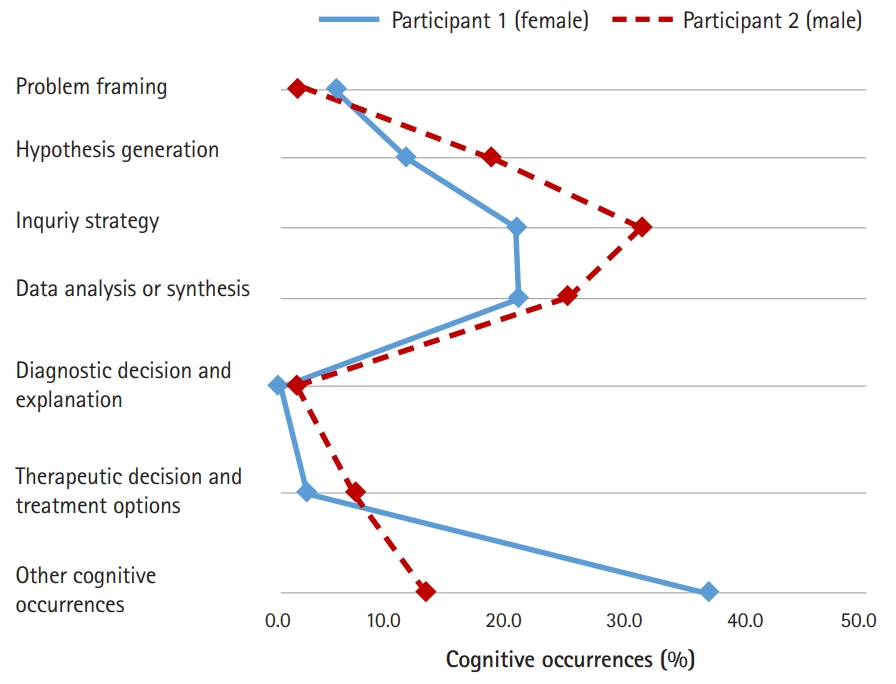

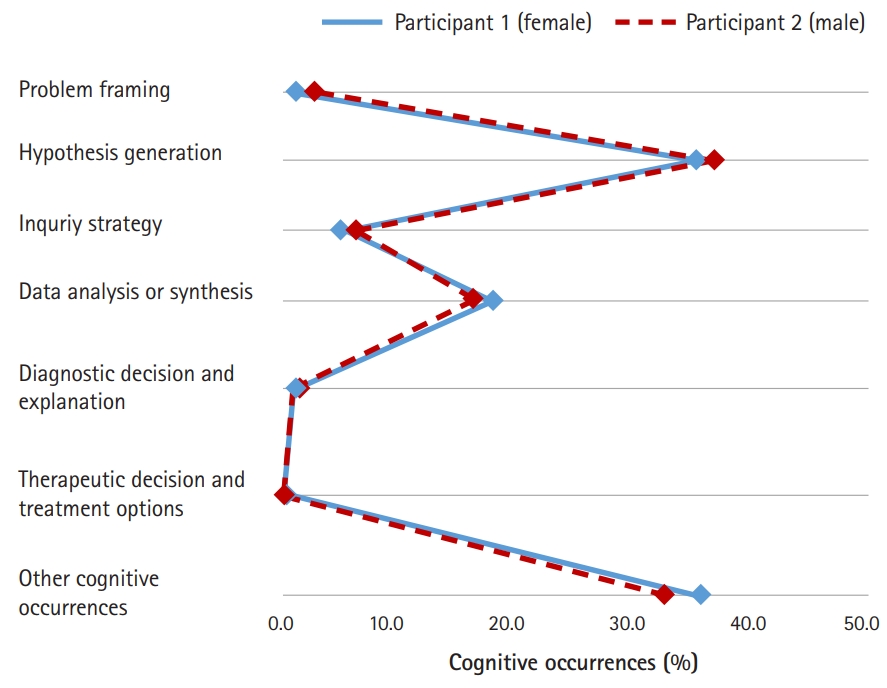

- In order to explore the similarities of the proportional patterns of clinical reasoning between the 2 participants in each condition, line graphs were used to represent the results of Table 5 graphically. As shown in Fig. 2, a similar proportional pattern of clinical reasoning between both participants was evident in the CPX condition. In general, more clinical reasoning was observed in participant 1 (female) than in participant 2 (male). The inquiry strategy phase (39.8% for participant 1 and 28.9% for participant 2) was the most frequent clinical reasoning process for both participants, and the data analysis or synthesis phase (20.3% for participant 1 and 17.9% for participant 2) was the next most frequent.

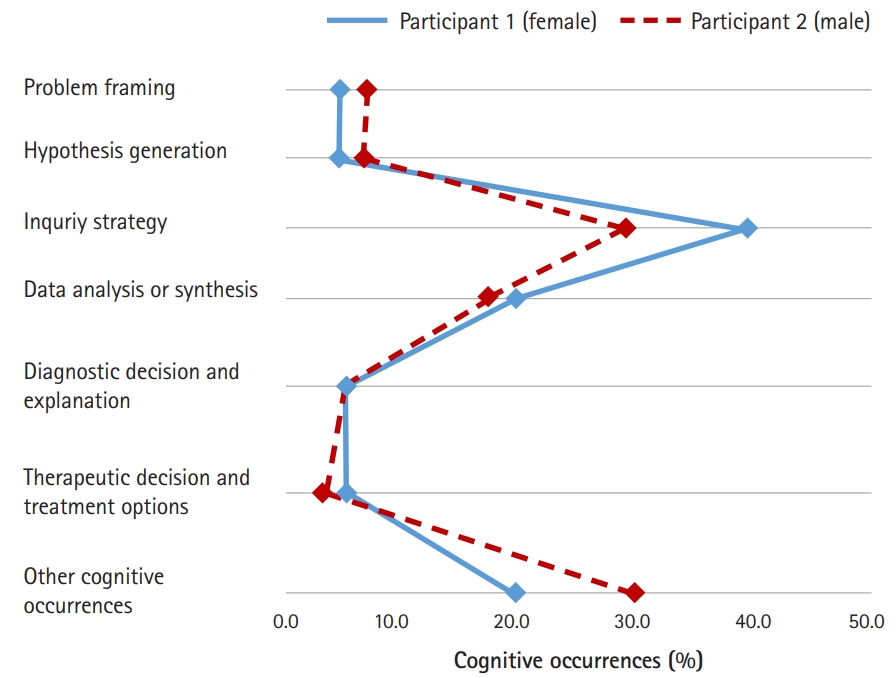

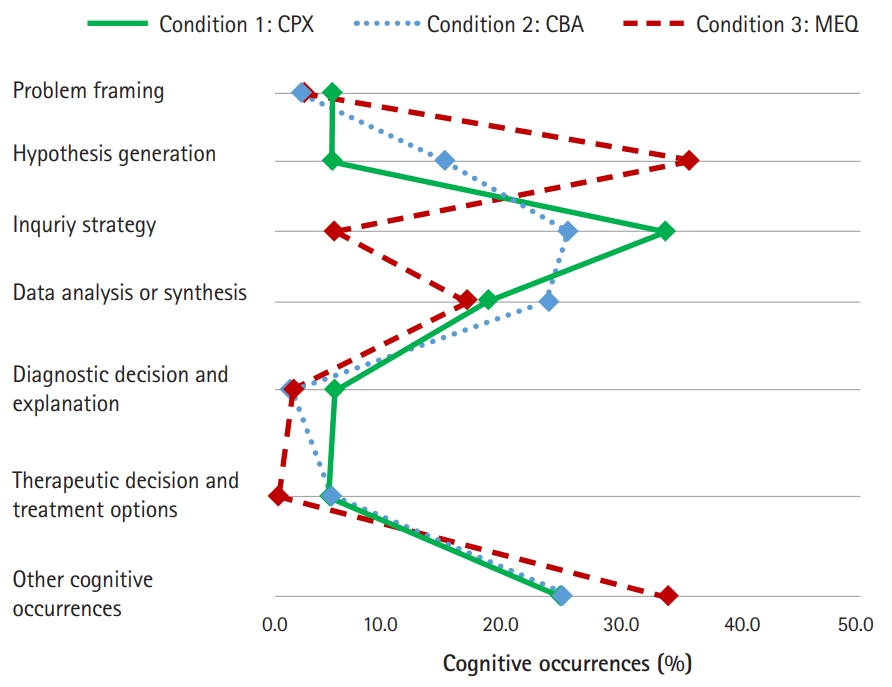

- Likewise, similar proportional patterns of clinical reasoning were observed between the 2 participants in the CBA condition (Fig. 3). The inquiry strategy phase (21.0% for participant 1 and 32.3% for participant 2) and the data analysis or synthesis phase (21.5% for participant 1 and 25.4% for participant 2) were the 2 most frequent types of cognitive occurrences.

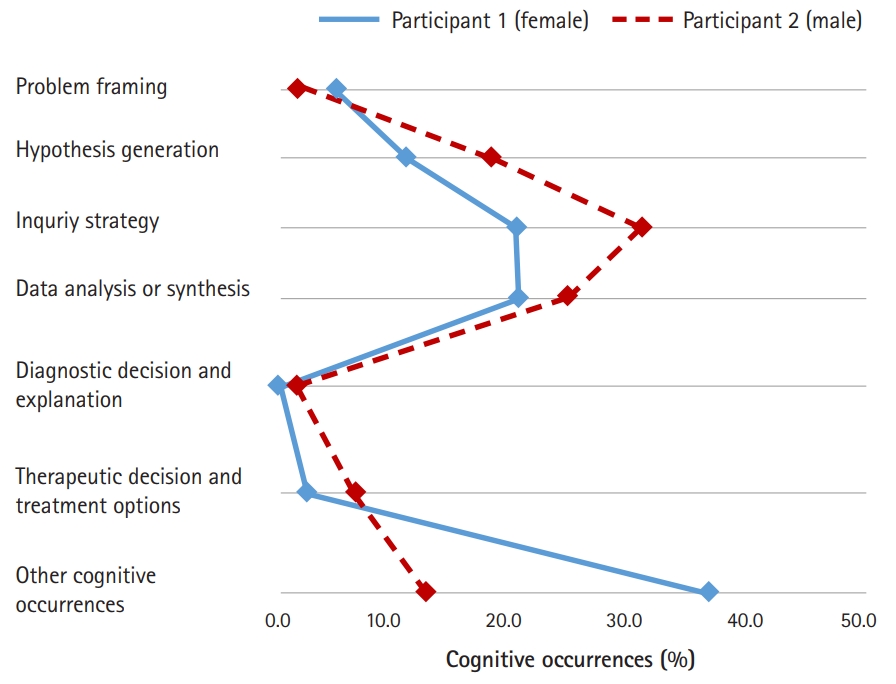

- Similarly, both participants’ proportional patterns of clinical reasoning were almost identical in the MEQ condition, as presented in Fig. 4. The hypothesis generation phase (36.8% for participant 1 and 38.0% for participant 2) was the most frequent clinical reasoning process for both participants, and the data analysis or synthesis phase (18.1% for participant 1 and 16.8% for participant 2) was the next most frequent.

- Differences in cognitive patterns among the 3 different conditions (research question 2)

- In order to explore the differences among the participants’ cognitive patterns facilitated by each condition, the average percentages of the types of cognitive occurrences from both participants across all conditions were compared. The average percentages for clinical reasoning were 74.6% (CPX), 74.0% (CBA), and 65.4% (MEQ), whereas the average percentages for other cognitive occurrences across all conditions were 25.4% (CPX), 26.0% (CBA), and 34.6% (MEQ) (see Table 5 for the detailed average percentages of each HDR process and other cognitive occurrences).

- The average values of the 2 participants’ proportional patterns of clinical reasoning in the 3 different assessment conditions are demonstrated in Fig. 5. In the MEQ condition, hypothesis generation was observed more frequently than any other condition, while more inquiry strategies were observed in the CPX and CBA conditions than in the MEQ condition. Clinical reasoning related to data analysis and synthesis was observed in all 3 conditions at a similar level. Clinical reasoning related to problem framing, diagnostic decision and explanation and therapeutic decision and treatment options was observed infrequently in all 3 conditions.

Results
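The condition-level averages reported in the Results can be checked from the per-participant percentages given in the text. The sketch below is an illustration under stated assumptions, not the authors’ procedure: it averages the 2 participants’ clinical reasoning percentages, rounds half-up to 1 decimal place, and takes the share of other cognitive occurrences as the complement to 100%. The function names and the rounding convention are assumptions, and the original figures were presumably computed from unrounded data.

```python
from decimal import Decimal, ROUND_HALF_UP

# Percentage of cognitive occurrences coded as clinical reasoning,
# as stated in the Results text: (participant 1, participant 2).
CLINICAL_REASONING = {
    "CPX": (Decimal("79.7"), Decimal("69.4")),
    "CBA": (Decimal("61.4"), Decimal("86.6")),
    "MEQ": (Decimal("63.7"), Decimal("67.0")),
}


def round_one_decimal(value):
    """Round half-up to 1 decimal place (an assumed convention)."""
    return value.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)


def summarize(percentages):
    """Return {condition: (mean clinical reasoning %, other occurrences %)}."""
    summary = {}
    for condition, (p1, p2) in percentages.items():
        mean_reasoning = round_one_decimal((p1 + p2) / 2)
        # Every occurrence is either clinical reasoning or "other",
        # so the two shares are assumed to sum to 100%.
        summary[condition] = (mean_reasoning, Decimal("100.0") - mean_reasoning)
    return summary


SUMMARY = summarize(CLINICAL_REASONING)
for condition, (reasoning, other) in SUMMARY.items():
    print(f"{condition}: clinical reasoning {reasoning}%, other {other}%")
```

Under these assumptions the computed values match the averages stated in the text (74.6%, 74.0%, and 65.4% for clinical reasoning; 25.4%, 26.0%, and 34.6% for other cognitive occurrences). Decimal arithmetic is used so that values ending in 5 at the second decimal place, such as 74.55, round predictably.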

Discussion

- There are 2 key findings of this study. First, for each condition, the cognitive patterns of the 2 participants were similar to each other (Figs. 2–4). This implies that each assessment type may stimulate thinking in a consistent manner across multiple test-takers. The first finding is the precondition for the second finding, which is that the 3 different assessment types stimulated different aspects of clinical reasoning. As shown in Fig. 5, the CPX promoted reasoning involving inquiry strategy, but it rarely promoted hypothesis generation. By design, one of the key features of the CPX is to provide a unique situation where test-takers freely interact with standardized patients by asking questions during the diagnostic process [1,3]. Thus, test-takers mostly take advantage of being engaged in dynamic inquiry activities during the CPX, although they may not have enough time to think carefully in order to formulate hypotheses. In contrast, the MEQ strongly promoted hypothesis generation, but not inquiry strategy. The MEQ includes an item that requests test-takers to articulate hypotheses for a given situation, which directly stimulates their engagement in reasoning related to hypothesis generation. Due to the static nature of the paper-based mode, the MEQ does not have a dynamic mechanism through which test-takers could generate a list of questions to obtain corresponding patient information. Interestingly, the CBA strongly promoted inquiry strategy at a level close to that of the CPX, while the CBA promoted hypothesis generation at a level higher than the CPX, but lower than the MEQ. The key features of the CBA include a series of consecutive video clips divided by critical decision points where test-takers are given clips sequentially and asked to reason at that particular moment. With the multimedia feature delivering richer situational information in an interactive mode, the CBA might be able to provide test-takers opportunities to practice both inquiry strategies and hypothesis generation, which were not promoted simultaneously by the other assessment types.

- Four limitations of this study can be noted. First, generalization of the findings of the study is limited by the small sample size of participants and the inclusion of a single clinical presentation. Second, the collected data were based on the participants’ recalled narratives, which might disproportionately reflect positive outcomes due to their status as high-performing medical students; furthermore, the students might have missed some important cognitive occurrences when they were watching their performance videos. Third, a specific test item for the therapeutic decision and treatment options was not included in the MEQ used in this study; hence, this particular cognitive process was not detected in the MEQ for either participant. Fourth, test-retest bias may have affected our findings, as 3 different tests with similar content were consecutively administered to each participant.

- In conclusion, we argue that different assessment designs stimulate different patterns of thinking; thus, they may measure different aspects of cognitive performance. Although each assessment was designed with the same content (e.g., chest pain) and the same goal (e.g., assessing clinical reasoning), the combination of different delivery modes and the different ways that each test item is designed may cause variation in students’ actual thought processes while taking the test. It is essential to strive to understand which aspects of cognitive performance are actually measured in different assessments in order to improve such assessments. Accordingly, we recommend that researchers consider the research method employed in this study, capturing and analyzing data on cognitive occurrences, as an alternative method for examining the validity of assessment instruments, in particular for construct validity. For example, our method allowed us to discover discrepancies between participants’ observed performance and their actual thought process for the CPX. While the participants were using a stethoscope during the physical examination part, their attention was not directed towards the task at hand; instead, they focused on matters such as the next steps in the physical examination and retrieving information for the patient education and counseling part. These inattentive actions, coded as other cognitive occurrences in this study, may not be detected through expert observation that uses a performance checklist in the current design of the CPX. Thus, through the method utilized in this study, it is possible to check whether a certain assessment instrument is valid for assessing the intended aspects of cognitive performance.

Article information

Authors’ contributions

Conceptualization: IC, BDR, JL. Data curation: SK, IC, BYY. Formal analysis: SK, IC, BYY, MJK. Funding acquisition: JL, IC, BDR, BYY. Methodology: IC, SK, BYY, SJC, SHK, JL, BDR. Project administration: BDR, IC, JL. Visualization: IC, SK, BDR. Writing–original draft: SK, IC. Writing–review & editing: IC, SK, BYY, BDR, JL, SJC, MJK, SHK.

Conflict of interest

No potential conflict of interest relevant to this article was reported.

Funding

This work was supported in part by the University of Georgia College of Education under the 2017 Faculty Research Program and by Inje University under grant no. 20170028.

References

- 1. Cho JJ, Kim JY, Park HK, Hwang IH. Correlation of CPX scores with the scores on written multiple-choice examinations on the certifying examination for family medicine in 2009 to 2011. Korean J Med Educ 2011;23:315-322. https://doi.org/10.3946/kjme.2011.23.4.315

- 2. Hwang JY, Jeong HS. Relationship between the content of the medical knowledge written examination and clinical skill score in medical students. Korean J Med Educ 2011;23:305-314. https://doi.org/10.3946/kjme.2011.23.4.305

- 3. Harden RM, Stevenson M, Downie WW, Wilson GM. Assessment of clinical competence using objective structured examination. Br Med J 1975;1:447-451. https://doi.org/10.1136/bmj.1.5955.447

- 4. Harden RM. Revisiting ‘assessment of clinical competence using an objective structured clinical examination (OSCE)’. Med Educ 2016;50:376-379. https://doi.org/10.1111/medu.12801

- 5. Irwin WG, Bamber JH. The cognitive structure of the modified essay question. Med Educ 1982;16:326-331. https://doi.org/10.1111/j.1365-2923.1982.tb00945.x

- 6. Palmer EJ, Devitt PG. Assessment of higher order cognitive skills in undergraduate education: modified essay or multiple choice questions?: research paper. BMC Med Educ 2007;7:49. https://doi.org/10.1186/1472-6920-7-49

- 7. Choi I, Hong YC, Park H, Lee Y. Case-based learning for anesthesiology: enhancing dynamic decision-making skills through cognitive apprenticeship and cognitive flexibility. In: Luckin R, Goodyear P, Grabowski B, Puntambeker S, Underwood J, Winters N, editors. Handbook on design in educational technology. New York (NY): Routledge; 2013. p. 230-240.

- 8. Hwang K, Lee YM, Baik SH. Clinical performance assessment as a model of Korean medical licensure examination. Korean J Med Educ 2001;13:277-287. https://doi.org/10.3946/kjme.2001.13.2.277

- 9. Klein GA, Calderwood R, Macgregor D. Critical decision method for eliciting knowledge. IEEE Trans Syst Man Cybern 1989;19:462-472. https://doi.org/10.1109/21.31053

- 10. Barrows HS. Problem-based learning applied to medical education. Springfield (IL): Southern Illinois University; 1994.

- 11. Barrows HS, Tamblyn RM. Problem-based learning: an approach in medical education. New York (NY): Springer Publishing Company; 1980.

- 12. Crandall B, Klein G, Hoffman RR. Working minds: a practitioner’s guide to cognitive task analysis. Cambridge (MA): The MIT Press; 2006.

- 13. Hoffman RR, Crandall B, Shadbolt N. Use of the critical decision method to elicit expert knowledge: A case study in the methodology of cognitive task analysis. Hum Factors 1998;40:254-276. https://doi.org/10.1518/001872098779480442
